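Deploying AI and deep learning algorithms (such as classification, object detection and object tracking) on embedded devices to enable edge computing is becoming an important direction for lightweight deep learning. Today we share a practical example: a project that deploys a deep learning model (SqueezeNet-SSD running on the ncnn inference framework) on the ELF 1 development board, reads the camera video stream in real time, and runs object detection on the objects in each frame.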

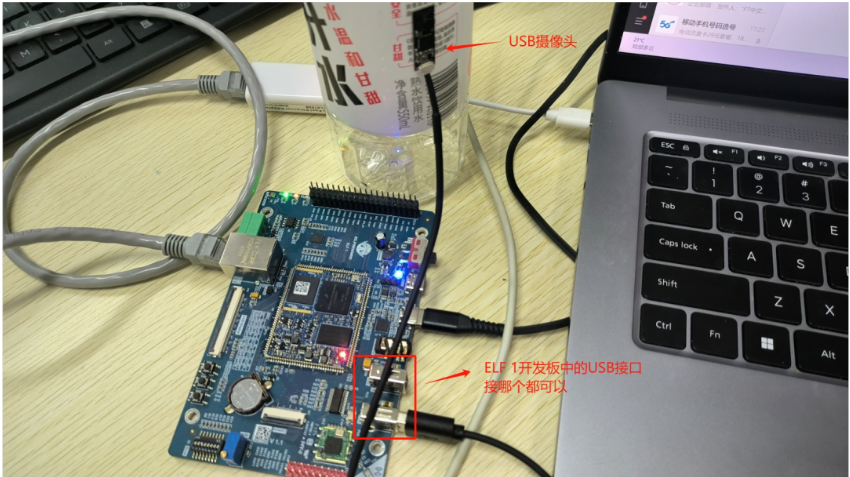

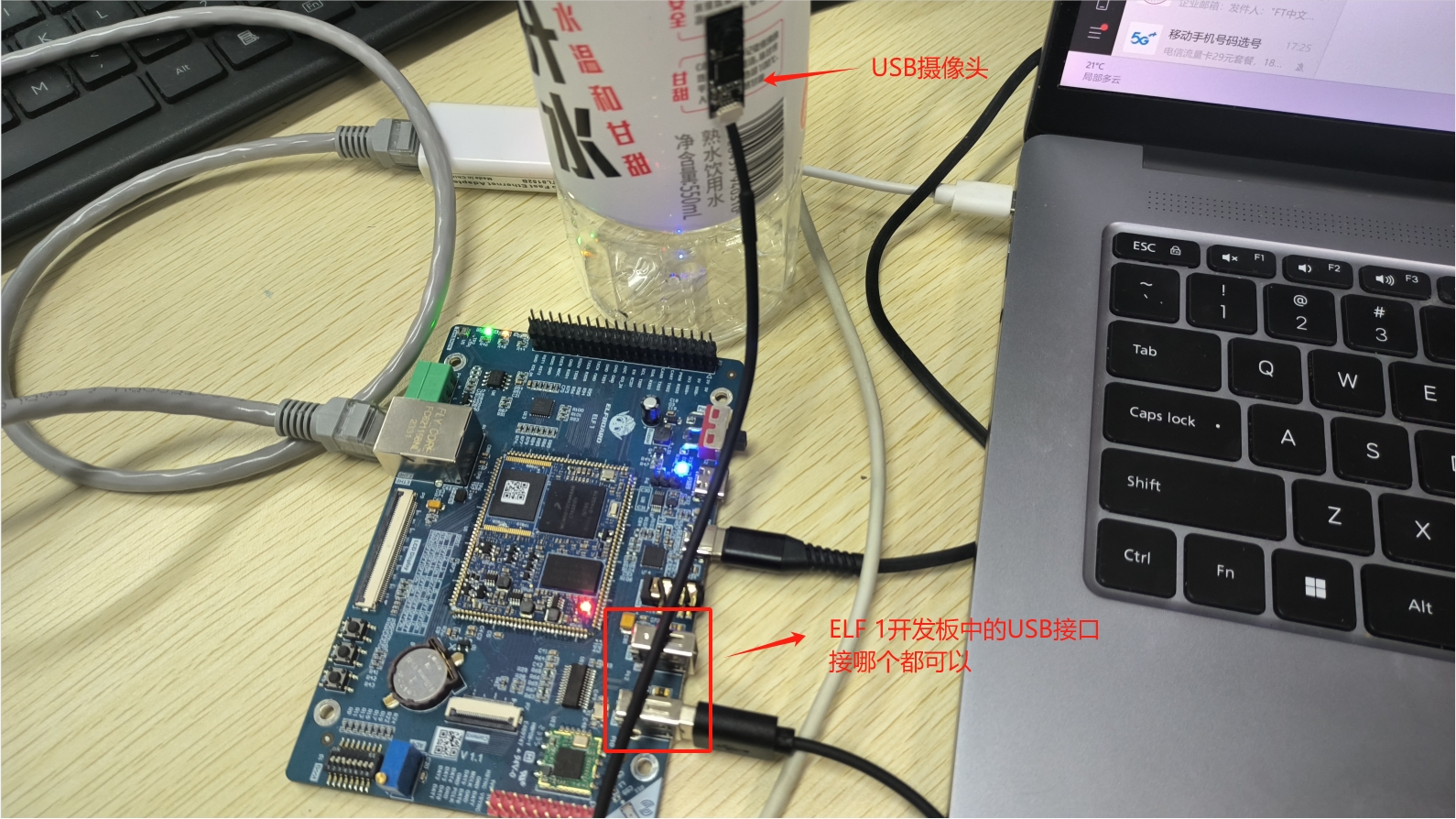

Hardware required: 1. the ELF 1 development board based on the NXP i.MX6ULL, 2. an Ethernet cable, 3. a USB camera.

Getting the camera device path on the board

This project uses an ordinary USB camera, which can be plugged into any USB port on the board.

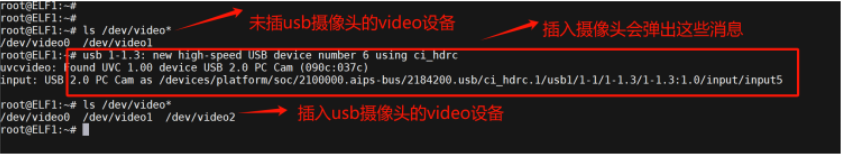

Using a USB camera on a Linux board usually involves a few basic command-line operations, all of which go through the Video4Linux (V4L2) kernel framework API. Some common commands and concepts:

1. List all camera devices: `ls /dev/video*` lists every connected video device. These usually appear as /dev/video0, /dev/video1 and so on. As shown below, the camera on this board is /dev/video2 (the same whichever USB port it is plugged into).

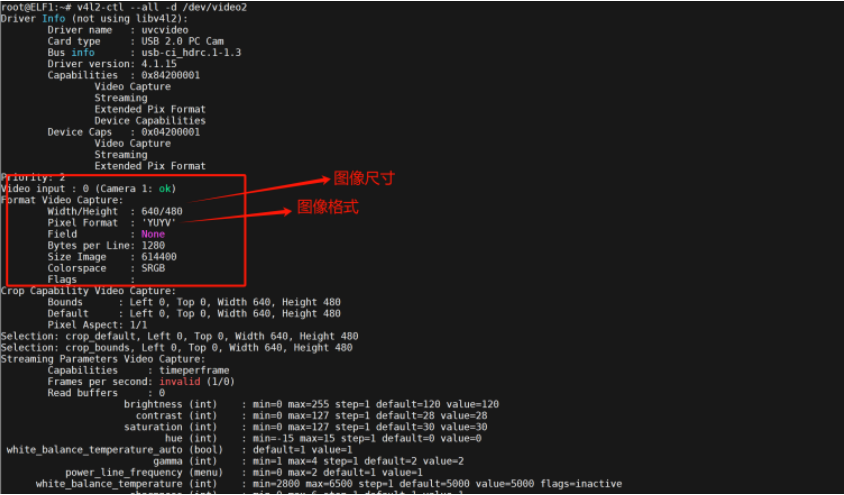

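2. View camera information: `v4l2-ctl --all -d /dev/video2` shows all information about a specific camera (here /dev/video2), including supported formats and frame rates. The output shows an image size of 640×480, so the program has to resize each frame to the input size of the object detector (300×300 for the SqueezeNet-SSD model used below).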
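In the program below, ncnn does the resize (`from_pixels_resize`), and the detector reports box corners as fractions of the image size, so they map straight back onto the full-size frame. The following Python sketch mirrors the arithmetic in `detect_squeezenet`; the function name `ssd_row_to_rect` and the returned tuple layout are illustrative assumptions, and each row is read as `[label, score, x1, y1, x2, y2]`, as in the C++ code.

```python
def ssd_row_to_rect(row, img_w, img_h):
    """Convert one SSD detection row to a pixel rectangle.

    row is [label, score, x1, y1, x2, y2] with corners normalized to 0..1,
    as read in detect_squeezenet. Because the coordinates are normalized,
    the 300x300 network input size never appears here: multiplying by the
    original frame size is enough.
    Returns (label, score, x, y, width, height).
    """
    label, score, x1, y1, x2, y2 = row
    x = x1 * img_w
    y = y1 * img_h
    width = x2 * img_w - x
    height = y2 * img_h - y
    return int(label), score, x, y, width, height


# A 480x640 frame (640x480 rotated by 90 degrees, as the program does);
# label 15 is "person" in the VOC class list.
print(ssd_row_to_rect([15, 0.9, 0.25, 0.5, 0.75, 1.0], 480, 640))
# → (15, 0.9, 120.0, 320.0, 240.0, 320.0)
```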

Writing the program: reading the camera video, running detection, and sending the results to the host PC

Next, write the program that runs on the board. It has two jobs: 1. read the video captured by the camera and run detection on it, 2. send the detection results to the host PC over the network.

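For the first job, the program has to keep reading video while it detects objects, so it uses two threads: one captures frames and the other runs detection. This keeps the camera from stalling while a frame is being processed. The threads communicate through a queue; a mutex protects every access to the shared queue to avoid race conditions, and a condition variable wakes the detection thread when a new frame arrives.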
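The queue scheme can be modeled in Python with `threading.Condition`, which pairs a lock with a condition variable the same way `std::mutex` and `std::condition_variable` are paired in the C++ program. This is an illustrative sketch only; the class name `LatestFrameQueue` is hypothetical. Like the C++ code, it holds at most two frames and drops the oldest when full, so the slow detector always works on a recent frame instead of falling further behind.

```python
import collections
import threading


class LatestFrameQueue:
    """Bounded queue that drops the oldest item when full."""

    def __init__(self, maxsize=2):
        self._items = collections.deque()
        self._maxsize = maxsize
        self._cond = threading.Condition()  # lock + condition variable
        self._closed = False

    def put(self, item):
        with self._cond:
            if len(self._items) >= self._maxsize:
                self._items.popleft()  # discard the oldest frame
            self._items.append(item)
            self._cond.notify()  # wake one waiting consumer

    def close(self):
        """Mark the stream finished and wake every waiting consumer."""
        with self._cond:
            self._closed = True
            self._cond.notify_all()

    def get(self):
        """Block until an item is available; return None once closed and empty."""
        with self._cond:
            self._cond.wait_for(lambda: self._items or self._closed)
            if not self._items:
                return None
            return self._items.popleft()


if __name__ == "__main__":
    q = LatestFrameQueue(maxsize=2)
    for frame in range(5):  # the producer outpaces the consumer
        q.put(frame)
    q.close()
    print([q.get(), q.get(), q.get()])  # → [3, 4, None]
```

The `close()` flag plays the role of `finished` in the C++ code: setting it under the lock and notifying all waiters ensures the consumer cannot miss the wake-up.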

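For the second job, a TCP socket is used: each annotated frame is JPEG-encoded and sent to the host PC, preceded by its length as a 4-byte big-endian integer.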
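TCP delivers a byte stream rather than separate messages, so the receiver needs to know where each image ends; that is what the length prefix is for (`htonl` on the board, `int.from_bytes(..., byteorder='big')` on the host). A minimal Python sketch of this framing, with hypothetical helper names `send_frame`/`recv_frame`, exercised over a local socket pair instead of the real network:

```python
import socket
import struct

HEADER = struct.Struct(">I")  # 4-byte unsigned length, big-endian (network order)


def send_frame(sock, payload):
    """Send one length-prefixed message; sendall retries partial sends."""
    sock.sendall(HEADER.pack(len(payload)) + payload)


def recv_exact(sock, n):
    """Read exactly n bytes; raise ConnectionError if the peer closes early."""
    buf = bytearray()
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            raise ConnectionError("connection closed mid-message")
        buf += chunk
    return bytes(buf)


def recv_frame(sock):
    """Receive one length-prefixed message."""
    (size,) = HEADER.unpack(recv_exact(sock, HEADER.size))
    return recv_exact(sock, size)


if __name__ == "__main__":
    a, b = socket.socketpair()
    send_frame(a, b"first image")
    send_frame(a, b"second")
    print(recv_frame(b), recv_frame(b))  # → b'first image' b'second'
    a.close()
    b.close()
```

A single `recv()` may return fewer bytes than requested, which is why both the 4-byte header and the payload are read with a loop.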

The complete program is below:

```cpp
/* Save as squeezenetssd_thread.cpp */
#include "net.h"

// Full OpenCV: VideoCapture, rotate and imencode are used below
#include <opencv2/core/core.hpp>
#include <opencv2/imgcodecs.hpp>
#include <opencv2/imgproc/imgproc.hpp>
#include <opencv2/videoio.hpp>

#include <stdint.h>
#include <stdio.h>
#include <stdlib.h>
#include <vector>

// Sockets
#include <arpa/inet.h>
#include <netinet/in.h>
#include <sys/socket.h>
#include <unistd.h>

/* Multithreading */
#include <condition_variable>
#include <mutex>
#include <queue>
#include <thread>

/* Inter-thread queue (globals) */
std::queue<cv::Mat> frameQueue;
std::mutex queueMutex;
std::condition_variable queueCondVar;
bool finished = false;           // guarded by queueMutex
const size_t MAX_QUEUE_SIZE = 2; // detection is very slow, so a longer queue gains nothing

struct Object
{
    cv::Rect_<float> rect;
    int label;
    float prob;
};

ncnn::Net squeezenet;
int client_sock = -1;

static int detect_squeezenet(const cv::Mat& bgr, std::vector<Object>& objects)
{
    const int target_size = 300;
    int img_w = bgr.cols;
    int img_h = bgr.rows;

    // Resize to the 300x300 network input and subtract the channel means
    ncnn::Mat in = ncnn::Mat::from_pixels_resize(bgr.data, ncnn::Mat::PIXEL_BGR, bgr.cols, bgr.rows, target_size, target_size);
    const float mean_vals[3] = {104.f, 117.f, 123.f};
    in.substract_mean_normalize(mean_vals, 0);

    ncnn::Extractor ex = squeezenet.create_extractor();
    ex.input("data", in);
    ncnn::Mat out;
    ex.extract("detection_out", out);

    // Each row: label, score, x1, y1, x2, y2 (corners normalized to 0..1)
    objects.clear();
    for (int i = 0; i < out.h; i++)
    {
        const float* values = out.row(i);
        Object object;
        object.label = values[0];
        object.prob = values[1];
        object.rect.x = values[2] * img_w;
        object.rect.y = values[3] * img_h;
        object.rect.width = values[4] * img_w - object.rect.x;
        object.rect.height = values[5] * img_h - object.rect.y;
        objects.push_back(object);
    }
    return 0;
}

static void draw_objects(cv::Mat& bgr, const std::vector<Object>& objects)
{
    static const char* class_names[] = {"background", "aeroplane", "bicycle", "bird", "boat", "bottle", "bus", "car", "cat", "chair", "cow", "diningtable", "dog", "horse", "motorbike", "person", "pottedplant", "sheep", "sofa", "train", "tvmonitor"};

    for (size_t i = 0; i < objects.size(); i++)
    {
        const Object& obj = objects[i];
        fprintf(stderr, "%d = %.5f at %.2f %.2f %.2f x %.2f\n", obj.label, obj.prob, obj.rect.x, obj.rect.y, obj.rect.width, obj.rect.height);

        cv::rectangle(bgr, obj.rect, cv::Scalar(255, 0, 0));

        char text[256];
        snprintf(text, sizeof(text), "%s %.1f%%", class_names[obj.label], obj.prob * 100);

        // Place the label above the box, clamped to the image borders
        int baseLine = 0;
        cv::Size label_size = cv::getTextSize(text, cv::FONT_HERSHEY_SIMPLEX, 0.5, 1, &baseLine);
        int x = obj.rect.x;
        int y = obj.rect.y - label_size.height - baseLine;
        if (y < 0) y = 0;
        if (x + label_size.width > bgr.cols) x = bgr.cols - label_size.width;

        cv::rectangle(bgr, cv::Rect(cv::Point(x, y), cv::Size(label_size.width, label_size.height + baseLine)), cv::Scalar(255, 255, 255), -1);
        cv::putText(bgr, text, cv::Point(x, y + label_size.height), cv::FONT_HERSHEY_SIMPLEX, 0.5, cv::Scalar(0, 0, 0));
    }
}

// send() may write only part of the buffer, so loop until everything is sent
static bool send_all(int sock, const void* data, size_t size)
{
    const char* p = static_cast<const char*>(data);
    while (size > 0)
    {
        ssize_t n = send(sock, p, size, MSG_NOSIGNAL); // no SIGPIPE if the client has gone (Linux)
        if (n <= 0) return false;
        p += n;
        size -= static_cast<size_t>(n);
    }
    return true;
}

// Send one frame: 4-byte big-endian length, then the JPEG bytes
static bool send_to_client(const cv::Mat& image, int sock)
{
    std::vector<uchar> buffer;
    std::vector<int> params = {cv::IMWRITE_JPEG_QUALITY, 80};
    cv::imencode(".jpg", image, buffer, params);
    uint32_t len = htonl(static_cast<uint32_t>(buffer.size()));
    return send_all(sock, &len, sizeof(len)) && send_all(sock, buffer.data(), buffer.size());
}

/* Thread 1: captures camera frames */
static void captureThreadFunction(cv::VideoCapture& cap)
{
    while (true)
    {
        cv::Mat frame;
        cap >> frame;
        if (frame.empty())
        {
            // Camera closed or read error: tell the detection thread to stop
            std::lock_guard<std::mutex> lock(queueMutex);
            finished = true;
            queueCondVar.notify_all();
            break;
        }
        cv::rotate(frame, frame, cv::ROTATE_90_COUNTERCLOCKWISE); // rotate 90 degrees counter-clockwise

        std::lock_guard<std::mutex> lock(queueMutex);
        if (finished) break; // detection thread has stopped (e.g. client disconnected)
        if (frameQueue.size() >= MAX_QUEUE_SIZE)
            frameQueue.pop(); // drop the oldest frame
        frameQueue.push(frame);
        queueCondVar.notify_one();
    }
}

/* Thread 2: runs detection and sends the results */
static void processThreadFunction()
{
    while (true)
    {
        cv::Mat frame;
        {
            std::unique_lock<std::mutex> lock(queueMutex);
            queueCondVar.wait(lock, [] { return !frameQueue.empty() || finished; });
            if (finished && frameQueue.empty())
                return; // unique_lock releases the mutex on return
            frame = frameQueue.front();
            frameQueue.pop();
        } // lock released here, so detection does not block capture

        std::vector<Object> objects;
        detect_squeezenet(frame, objects);
        draw_objects(frame, objects);
        if (!send_to_client(frame, client_sock))
        {
            // Client disconnected: stop the capture thread as well
            std::lock_guard<std::mutex> lock(queueMutex);
            finished = true;
            return;
        }
    }
}

int main()
{
    int server_sock = socket(AF_INET, SOCK_STREAM, 0);
    if (server_sock < 0)
    {
        perror("socket creation failed");
        return -1;
    }

    struct sockaddr_in server_addr = {}; // zero-filled, including sin_zero
    server_addr.sin_family = AF_INET;
    server_addr.sin_port = htons(12345);
    server_addr.sin_addr.s_addr = htonl(INADDR_ANY);
    if (bind(server_sock, (struct sockaddr*)&server_addr, sizeof(server_addr)) < 0)
    {
        perror("bind failed");
        close(server_sock);
        return -1;
    }
    if (listen(server_sock, 1) < 0)
    {
        perror("listen failed");
        close(server_sock);
        return -1;
    }

    printf("Waiting for client connection...\n");
    struct sockaddr_in client_addr;
    socklen_t client_len = sizeof(client_addr);
    client_sock = accept(server_sock, (struct sockaddr*)&client_addr, &client_len);
    if (client_sock < 0)
    {
        perror("accept failed");
        close(server_sock);
        return -1;
    }
    printf("Client connected\n");

    cv::VideoCapture cap("/dev/video2");
    if (!cap.isOpened())
    {
        fprintf(stderr, "Failed to open camera\n");
        close(client_sock);
        close(server_sock);
        return -1;
    }

    // The i.MX6ULL has no Vulkan-capable GPU, so inference runs on the CPU
    squeezenet.opt.use_vulkan_compute = false;
    // original pretrained model from https://github.com/chuanqi305/SqueezeNetSSD
    // squeezenet_ssd_voc_deploy.prototxt
    // https://drive.google.com/open?id=0B3gersZ2cHIxdGpyZlZnbEQ5Snc
    // the ncnn model https://github.com/nihui/ncnn-assets/tree/master/models
    if (squeezenet.load_param("squeezenet_ssd_voc.param"))
        exit(-1);
    if (squeezenet.load_model("squeezenet_ssd_voc.bin"))
        exit(-1);

    std::thread captureThread(captureThreadFunction, std::ref(cap));
    std::thread processThread(processThreadFunction);
    captureThread.join();
    processThread.join();

    cap.release();
    close(client_sock);
    close(server_sock);
    return 0;
}
```
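In `draw_objects`, the label background is placed just above the detection box and then clamped so it stays inside the image. The same arithmetic as a small Python function (the name `label_origin` is hypothetical) is handy for checking edge cases without running the board program. Python's `int()` truncates toward zero, matching the C++ float-to-int conversion.

```python
def label_origin(box_x, box_y, label_w, label_h, baseline, img_cols):
    """Top-left corner of the label background, as computed in draw_objects.

    The label sits above the box; if that would cross the top edge it is
    moved to y = 0, and if it would run past the right edge it is shifted
    left so its right side lines up with the image border.
    """
    x = int(box_x)
    y = int(box_y - label_h - baseline)
    if y < 0:
        y = 0
    if x + label_w > img_cols:
        x = img_cols - label_w
    return x, y


print(label_origin(450, 100, 50, 12, 4, 480))  # → (430, 84)
```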

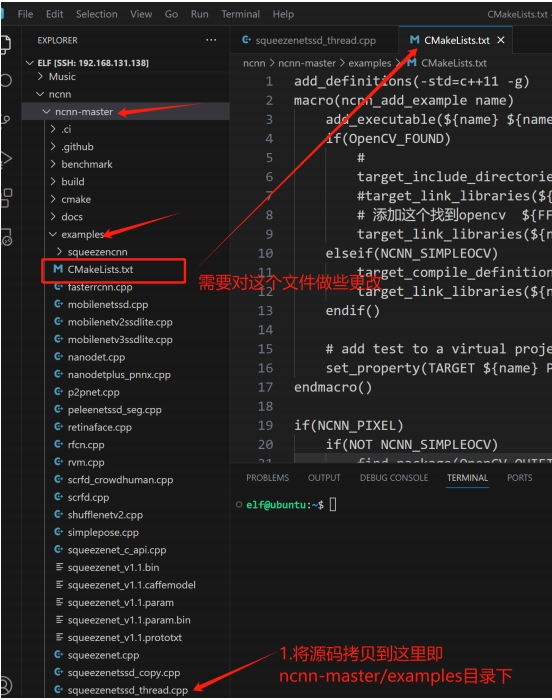

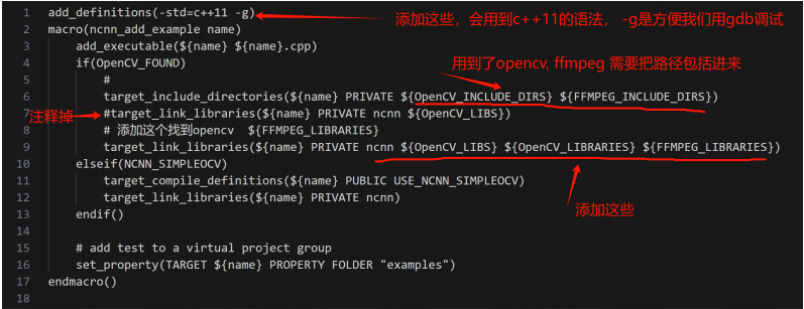

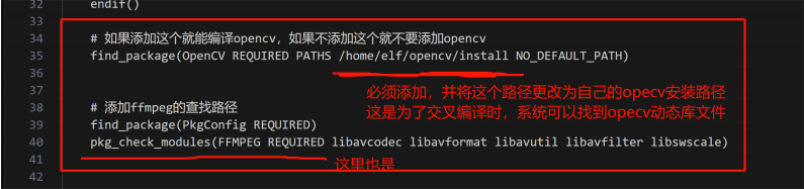

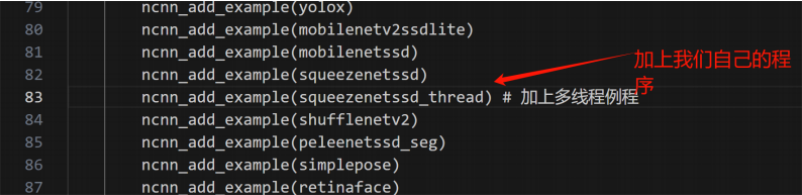

Copy the program above into the ncnn directory and modify the CMakeLists.txt file.

1. Copy the program

2. Modify CMakeLists.txt

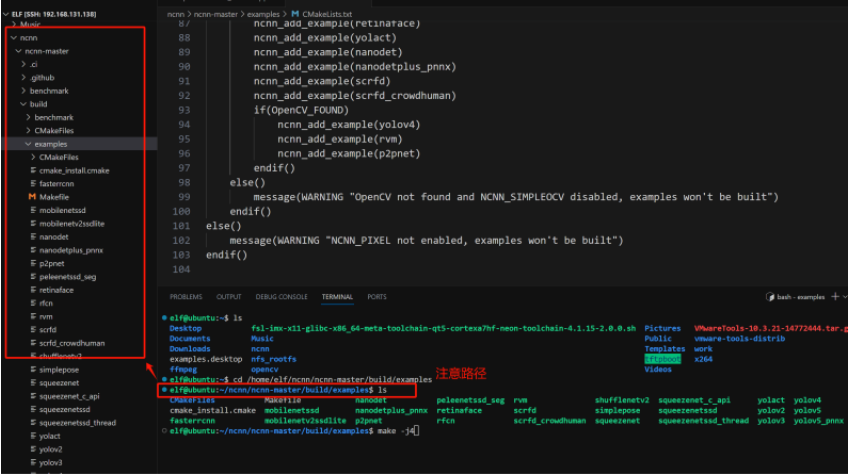

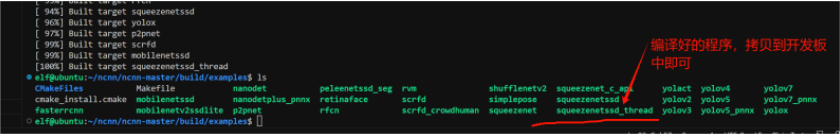

Once this is done, build the program. First switch to the ncnn-master/build directory and run:

```shell
cmake -DCMAKE_TOOLCHAIN_FILE=../toolchains/arm-linux-gnueabihf.toolchain.cmake -DNCNN_SIMPLEOCV=ON -DNCNN_BUILD_EXAMPLES=ON -DCMAKE_BUILD_TYPE=Release ..
```

Then switch to the ncnn-master/build/examples/ directory and run `make -j4`.

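The build succeeds. Copy the resulting executable to the board, together with squeezenet_ssd_voc.param and squeezenet_ssd_voc.bin, which the program loads by relative path from its working directory.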

Writing the host PC software

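The host software is simple; its source was generated with ChatGPT and is listed below. Replace 192.168.0.232 with your board's IP address.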

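```python
import socket

import cv2
import numpy as np

BOARD_IP = "192.168.0.232"  # IP address of the ELF 1 board
PORT = 12345


def recv_exact(sock, n):
    """Receive exactly n bytes; return None if the connection closes first."""
    buf = b""
    while len(buf) < n:
        chunk = sock.recv(n - len(buf))
        if not chunk:
            return None
        buf += chunk
    return buf


client_socket = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
client_socket.connect((BOARD_IP, PORT))  # connect to the server on the board

while True:
    # 4-byte big-endian frame size, then the JPEG data
    header = recv_exact(client_socket, 4)
    if header is None:
        break
    size = int.from_bytes(header, byteorder="big")
    buffer = recv_exact(client_socket, size)
    if buffer is None:
        break

    # Decode and display the frame
    frame = cv2.imdecode(np.frombuffer(buffer, dtype=np.uint8), cv2.IMREAD_COLOR)
    if frame is not None:
        cv2.imshow("Received Frame", frame)
    if cv2.waitKey(1) & 0xFF == ord("q"):
        break

client_socket.close()
cv2.destroyAllWindows()
```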
