Shadows

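Handling shadows in a rasterizer is unintuitive and computationally expensive: the scene must be rendered from the point of view of each light, stored in a texture, and then re-projected during the lighting pass. Worse, the result is not guaranteed to look good. Shadow maps are prone to aliasing (because the pixels seen by the light do not correspond to the pixels seen by the camera) and to blockiness (because each shadow-map texel stores a single depth value yet can cover a large area). In addition, most rasterizers need to support specialized shadow-map "types", such as cube-map shadows (for omnidirectional lights) or cascaded shadow maps (for large outdoor scenes), which adds considerably to the complexity of the renderer.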
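To make the shadow-map lookup concrete, here is a minimal sketch of the depth comparison done during the lighting pass. It assumes the point has already been projected into the light's space; the names `in_shadow`, `light_u`, `light_v`, `light_depth` and the `bias` value are illustrative choices, not part of any particular renderer.

```python
def in_shadow(shadow_map, light_u, light_v, light_depth, bias=0.01):
    """Return True if a point projected into light space is occluded.

    shadow_map  : 2D list holding one depth value per texel (light-view render).
    light_u/v   : the point's position in the shadow map, each in [0, 1].
    light_depth : the point's distance from the light.
    bias        : small offset (assumed value) to avoid self-shadowing.
    """
    height = len(shadow_map)
    width = len(shadow_map[0])
    # Pick the single texel that covers this position.
    tx = min(int(light_u * width), width - 1)
    ty = min(int(light_v * height), height - 1)
    return light_depth > shadow_map[ty][tx] + bias


# A tiny 2 x 2 shadow map: only the top-left texel saw a nearby occluder.
SHADOW_MAP = [[1.0, 5.0],
              [5.0, 5.0]]

near_corner = in_shadow(SHADOW_MAP, 0.1, 0.1, 3.0)   # behind the occluder
same_texel = in_shadow(SHADOW_MAP, 0.4, 0.4, 3.0)    # same texel, same answer
other_texel = in_shadow(SHADOW_MAP, 0.6, 0.1, 3.0)   # neighbouring texel
```

Because the two first queries fall in the same texel, they get the same answer no matter where the occluder's edge really is, which is the source of the blocky look described above.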

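In a ray tracer, a single code path handles every shadowing case. More importantly, the process is simple and intuitive: cast a ray from the surface toward the light source and check whether it is blocked. The PowerVR ray tracing architecture provides fast "tentative" rays that only check for the presence of geometry along the ray direction, which makes them particularly well suited to efficient shadow rendering.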
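The shadow-ray idea can be sketched in a few lines. This is a toy version that assumes the scene consists only of spheres; `ray_hits_sphere` and `is_lit` are hypothetical helper names, and nothing here reflects the PowerVR hardware interface.

```python
import math


def ray_hits_sphere(origin, direction, max_t, center, radius):
    """True if origin + t * direction hits the sphere for some 0 < t < max_t.

    direction must be unit length.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return False  # the ray's line misses the sphere entirely
    root = math.sqrt(disc)
    # Small epsilon avoids re-hitting the surface the ray starts on.
    return any(1e-4 < t < max_t for t in (-b - root, -b + root))


def is_lit(point, light_pos, spheres):
    """Cast one shadow ray from point to the light; any hit means shadow."""
    to_light = [l - p for l, p in zip(light_pos, point)]
    dist = math.sqrt(sum(v * v for v in to_light))
    direction = [v / dist for v in to_light]
    return not any(
        ray_hits_sphere(point, direction, dist, center, radius)
        for center, radius in spheres
    )


blocked = is_lit((0, 0, 0), (0, 10, 0), [((0, 5, 0), 1.0)])
unblocked = is_lit((0, 0, 0), (0, 10, 0), [((5, 5, 0), 1.0)])
```

The same function works for any light type, since only the position of the light changes; the ray only needs a yes/no answer, not the nearest hit.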

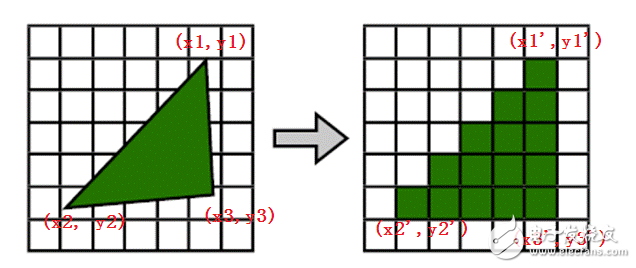

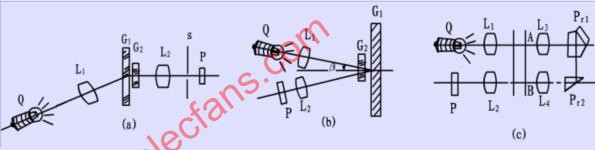

1. First, what is rasterization, and what does the process look like?

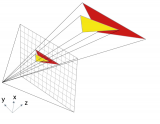

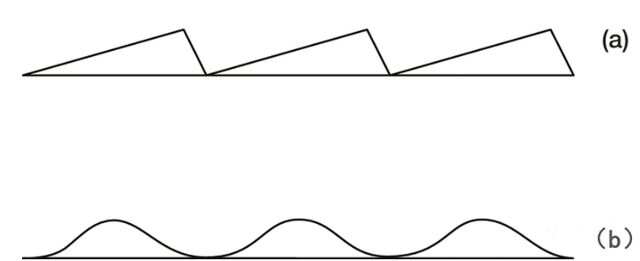

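Rasterization is the process of taking geometric data through a series of transformations and finally converting it into pixels so that it can be presented on a display device, as shown in the figure.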

In essence, rasterization consists of coordinate transformation and geometric discretization, as shown in the figure.

Further details of the rasterization process will be added when time permits.

2. Some details of mapping texels to pixels:

When rendering 2D output using pre-transformed vertices, care must be taken to ensure that each texel area correctly corresponds to a single pixel area, otherwise texture distortion can occur. By understanding the basics of the process that Direct3D follows when rasterizing and texturing triangles, you can ensure your Direct3D application correctly renders 2D output.

Figure 1: 6 x 6 resolution display

Figure 1 shows a diagram wherein pixels are modeled as squares. In reality, however, pixels are dots, not squares. Each square in Figure 1 indicates the area lit by the pixel, but a pixel is always just a dot at the center of a square. This distinction, though seemingly small, is important. A better illustration of the same display is shown in Figure 2:

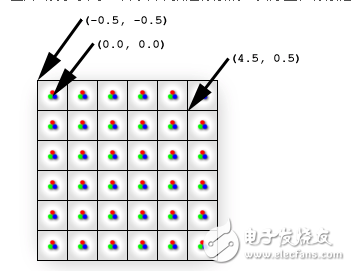

Figure 2: Display is composed of pixels

This diagram correctly shows each physical pixel as a point in the center of each cell. The screen space coordinate (0, 0) is located directly at the top-left pixel, and therefore at the center of the top-left cell. The top-left corner of the display is therefore at (-0.5, -0.5) because it is 0.5 cells to the left and 0.5 cells up from the top-left pixel. Direct3D will render a quad with corners at (0, 0) and (4, 4) as illustrated in Figure 3.
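The coordinate convention above can be written down as a small sketch. The helper names `pixel_cell_bounds` and `display_bounds` are made up for illustration; they simply encode "a pixel is a point at the center of its cell".

```python
def pixel_cell_bounds(px, py):
    """Cell lit by the pixel centered at (px, py): (left, top, right, bottom)."""
    return (px - 0.5, py - 0.5, px + 0.5, py + 0.5)


def display_bounds(width, height):
    """Outer edges of a width x height display in screen-space units."""
    left, top, _, _ = pixel_cell_bounds(0, 0)
    _, _, right, bottom = pixel_cell_bounds(width - 1, height - 1)
    return (left, top, right, bottom)


top_left_cell = pixel_cell_bounds(0, 0)
six_by_six = display_bounds(6, 6)
```

For the 6 x 6 display in the figures, the top-left corner comes out at (-0.5, -0.5), matching the text.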

Figure 3

Figure 3 shows where the mathematical quad is in relation to the display, but does not show what the quad will look like once Direct3D rasterizes it and sends it to the display. In fact, it is impossible for a raster display to fill the quad exactly as shown because the edges of the quad do not coincide with the boundaries between pixel cells. In other words, because each pixel can only display a single color, each pixel cell is filled with only a single color; if the display were to render the quad exactly as shown, the pixel cells along the quad's edge would need to show two distinct colors: blue where covered by the quad and white where only the background is visible.

Instead, the graphics hardware is tasked with determining which pixels should be filled to approximate the quad. This process is called rasterization, and is detailed in Rasterization Rules. For this particular case, the rasterized quad is shown in Figure 4:

Figure 4

Note that the quad passed to Direct3D (Figure 3) has corners at (0, 0) and (4, 4), but the rasterized output (Figure 4) has corners at (-0.5, -0.5) and (3.5, 3.5). Compare Figures 3 and 4 for rendering differences. You can see that what the display actually renders is the correct size, but has been shifted by -0.5 cells in the x and y directions. However, except for multi-sampling techniques, this is the best possible approximation to the quad. (See the Antialias Sample for thorough coverage of multi-sampling.) Be aware that if the rasterizer filled every cell the quad crossed, the resulting area would be of dimension 5 x 5 instead of the desired 4 x 4.

If you assume that screen coordinates originate at the top-left corner of the display grid instead of the top-left pixel, the quad appears exactly as expected. However, the difference becomes clear when the quad is given a texture. Figure 5 shows the 4 x 4 texture you'll map directly onto the quad.
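A rough way to check the corner positions quoted above is to list which pixel centers an axis-aligned quad covers. The sketch below assumes the top-left fill convention (left and top edges inclusive, right and bottom edges exclusive); `covered_pixels` and `filled_area` are hypothetical helper names, and real hardware rasterizes triangles rather than rectangles.

```python
import math


def covered_pixels(x0, y0, x1, y1):
    """Pixel centers (integer coordinates) inside [x0, x1) x [y0, y1)."""
    xs = range(math.ceil(x0), math.ceil(x1))
    ys = range(math.ceil(y0), math.ceil(y1))
    return [(x, y) for y in ys for x in xs]


def filled_area(pixels):
    """Outer bounds of the cells lit by the given pixels."""
    xs = [x for x, _ in pixels]
    ys = [y for _, y in pixels]
    return (min(xs) - 0.5, min(ys) - 0.5, max(xs) + 0.5, max(ys) + 0.5)


as_submitted = covered_pixels(0, 0, 4, 4)
shifted = covered_pixels(-0.5, -0.5, 3.5, 3.5)
area = filled_area(as_submitted)
```

Both quads light the same 16 pixels, and the lit area spans (-0.5, -0.5) to (3.5, 3.5), so the position of the input quad changes nothing about coverage here. What it does change is the texture coordinates each pixel receives.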

Figure 5

Because the texture is 4 x 4 texels and the quad is 4 x 4 pixels, you might expect the textured quad to appear exactly like the texture regardless of the location on the screen where the quad is drawn. However, this is not the case; even slight changes in position influence how the texture is displayed. Figure 6 illustrates how a quad between (0, 0) and (4, 4) is displayed after being rasterized and textured.

Figure 6

The quad drawn in Figure 6 shows the textured output (with a linear filtering mode and a clamp addressing mode) with the superimposed rasterized outline. The rest of this article explains exactly why the output looks the way it does instead of looking like the texture, but for those who want the solution, here it is: The edges of the input quad need to lie upon the boundary lines between pixel cells. By simply shifting the x and y quad coordinates by -0.5 units, texel cells will perfectly cover pixel cells and the quad can be perfectly recreated on the screen. (Figure 8 illustrates the quad at the corrected coordinates.)

(Translator's note: the coordinates of the window you create must be integers and therefore lie at the centers of pixel cells, so before the -0.5 shift the leftmost edge of your client area also falls at the center of a pixel cell.)

The details of why the rasterized output only bears slight resemblance to the input texture are directly related to the way Direct3D addresses and samples textures. What follows assumes you have a good understanding of texture coordinate space and bilinear texture filtering.

Getting back to our investigation of the strange pixel output, it makes sense to trace the output color back to the pixel shader: The pixel shader is called for each pixel selected to be part of the rasterized shape. The solid blue quad depicted in Figure 3 could have a particularly simple shader:

    float4 SolidBluePS() : COLOR
    {
        return float4( 0, 0, 1, 1 );
    }

For the textured quad, the pixel shader has to be changed slightly:

    texture MyTexture;

    sampler MySampler =
    sampler_state
    {
        Texture = <MyTexture>;
        MinFilter = Linear;
        MagFilter = Linear;
        AddressU = Clamp;
        AddressV = Clamp;
    };

    float4 TextureLookupPS( float2 vTexCoord : TEXCOORD0 ) : COLOR
    {
        return tex2D( MySampler, vTexCoord );
    }

That code assumes the 4 x 4 texture of Figure 5 is stored in MyTexture. As shown, the MySampler texture sampler is set to perform bilinear filtering on MyTexture. The pixel shader gets called once for each rasterized pixel, and each time the returned color is the sampled texture color at vTexCoord. Each time the pixel shader is called, the vTexCoord argument is set to the texture coordinates at that pixel. That means the shader is asking the texture sampler for the filtered texture color at the exact location of the pixel, as detailed in Figure 7:

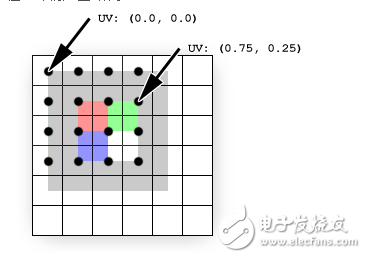

Figure 7

The texture (shown superimposed) is sampled directly at pixel locations (shown as black dots). Texture coordinates are not affected by rasterization (they remain in the projected screen-space of the original quad). The black dots show where the rasterization pixels are. The texture coordinates at each pixel are easily determined by interpolating the coordinates stored at each vertex: The pixel at (0, 0) coincides with the vertex at (0, 0); therefore, the texture coordinates at that pixel are simply the texture coordinates stored at that vertex, UV (0.0, 0.0). For the pixel at (3, 1), the interpolated coordinates are UV (0.75, 0.25) because that pixel is located at three-fourths of the texture's width and one-fourth of its height. These interpolated coordinates are what get passed to the pixel shader.
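The interpolation described above reduces to a linear remap for an axis-aligned quad whose UVs run from (0, 0) at one corner to (1, 1) at the opposite corner. The helper `interpolate_uv` below is an illustrative name and handles only this simple case, not general triangle interpolation.

```python
def interpolate_uv(px, py, quad_min, quad_max):
    """UV at pixel (px, py) for a quad with UV (0, 0) at quad_min and (1, 1) at quad_max."""
    (x0, y0), (x1, y1) = quad_min, quad_max
    return ((px - x0) / (x1 - x0), (py - y0) / (y1 - y0))


uv_corner = interpolate_uv(0, 0, (0, 0), (4, 4))
uv_3_1 = interpolate_uv(3, 1, (0, 0), (4, 4))
uv_3_1_shifted = interpolate_uv(3, 1, (-0.5, -0.5), (3.5, 3.5))
```

With the quad at (0, 0) to (4, 4), pixel (3, 1) receives UV (0.75, 0.25) as stated in the text. With the quad shifted by -0.5, the same pixel receives UV (0.875, 0.375), which is the center of texel (3, 1) in a 4 x 4 texture.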

The texels do not line up with the pixels in this example; each pixel (and therefore each sampling point) is positioned at the corner of four texels. Because the filtering mode is set to Linear, the sampler will average the colors of the four texels sharing that corner. This explains why the pixel expected to be red is actually three-fourths gray plus one-fourth red, the pixel expected to be green is one-half gray plus one-fourth red plus one-fourth green, and so on.

To fix this problem, all you need to do is correctly map the quad to the pixels to which it will be rasterized, and thereby correctly map the texels to pixels. Figure 8 shows the results of drawing the same quad between (-0.5, -0.5) and (3.5, 3.5), which is the quad intended from the outset.
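The effect of the shift can be reproduced with a small software model of bilinear filtering with clamp addressing. This is a sketch under stated assumptions: the texture holds single numbers instead of colors (the actual colors of Figure 5 are not reproduced here), texel centers sit at (i + 0.5) / size in UV space, and `sample_bilinear_clamp` and `render_quad` are hypothetical helpers rather than Direct3D calls.

```python
import math


def sample_bilinear_clamp(tex, u, v):
    """Bilinear sample of a 2D list, with texel centers at (i + 0.5) / size."""
    h, w = len(tex), len(tex[0])
    x = u * w - 0.5            # position measured in texel-center units
    y = v * h - 0.5
    x0, y0 = math.floor(x), math.floor(y)
    fx, fy = x - x0, y - y0    # blend weights toward the next texel

    def texel(ix, iy):
        # Clamp addressing: indices outside the texture reuse the edge texel.
        ix = min(max(ix, 0), w - 1)
        iy = min(max(iy, 0), h - 1)
        return tex[iy][ix]

    top = texel(x0, y0) * (1 - fx) + texel(x0 + 1, y0) * fx
    bottom = texel(x0, y0 + 1) * (1 - fx) + texel(x0 + 1, y0 + 1) * fx
    return top * (1 - fy) + bottom * fy


def render_quad(tex, quad_min, quad_max, size=4):
    """Sample the texture at each pixel center of a size x size pixel block."""
    (x0, y0), (x1, y1) = quad_min, quad_max
    return [
        [
            sample_bilinear_clamp(tex, (px - x0) / (x1 - x0), (py - y0) / (y1 - y0))
            for px in range(size)
        ]
        for py in range(size)
    ]


# Stand-in 4 x 4 texture: each texel stores its own index as a float.
TEXTURE = [[float(4 * row + col) for col in range(4)] for row in range(4)]

blurred = render_quad(TEXTURE, (0, 0), (4, 4))           # quad as first submitted
exact = render_quad(TEXTURE, (-0.5, -0.5), (3.5, 3.5))   # quad shifted by -0.5
```

In this model, pixel (1, 1) of the unshifted quad lands on the corner shared by texels 0, 1, 4 and 5 and returns their average, while every pixel of the shifted quad lands on a texel center and returns that texel unchanged.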

Figure 8

Summary

In summary, pixels and texels are actually points, not solid blocks. Screen space originates at the top-left pixel, but texture coordinates originate at the top-left corner of the texture's grid. Most importantly, remember to subtract 0.5 units from the x and y components of your vertex positions when working in transformed screen space in order to correctly align texels with pixels.

發布評論請先 登錄

相關推薦

如何評價光柵化渲染中光線在場景中的折返?

Littrow結構中光柵系統的配置與優化

測定衍射光柵的光柵常數

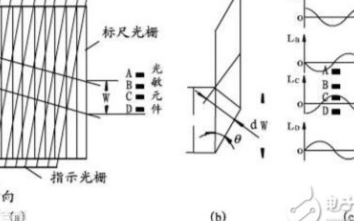

光柵的構造,光柵尺的構造和種類,光柵讀數頭

波導光柵,波導光柵原理什么?

光纖光柵,光纖光柵是什么意思

實時光線的混合渲染:光線追蹤VS光柵化

基于FPGA的光柵傳感器信號處理電路研究

光柵尺是什么_光柵尺的工作原理

為什么要光柵化?怎么實現光柵化方法?

光柵傳感器的應用_光柵傳感器選型指南

安全光柵和安全光幕是什么,有什么區別

3D渲染——光柵化渲染原理解析

什么是光柵化 光柵化中陰影的處理

什么是光柵化 光柵化中陰影的處理

評論